The AI Revolution: Avoiding Over-Alignment Pitfalls in UX Design

The advent of AI has marked a revolutionary turning point, significantly influencing every sector and driving remarkable advancements across fields such as healthcare, finance, manufacturing, transportation, and technology.

In an era dominated by AI, UX designers are inevitably drawn into this transformative race. Leveraging AI in their work offers substantial benefits, including time and cost savings, but it also raises the question:

Are there hidden risks that UI/UX designers should be aware of?

Not Left Out of the Race…

As AI use becomes more widespread, typing a prompt and receiving an instant, tailored response has become far more convenient than spending hours researching on Google or other data sources. Not left out of the AI race, I frequently use AI as a supportive tool in my design work. The answers appear in an instant: fast and incredibly convenient.

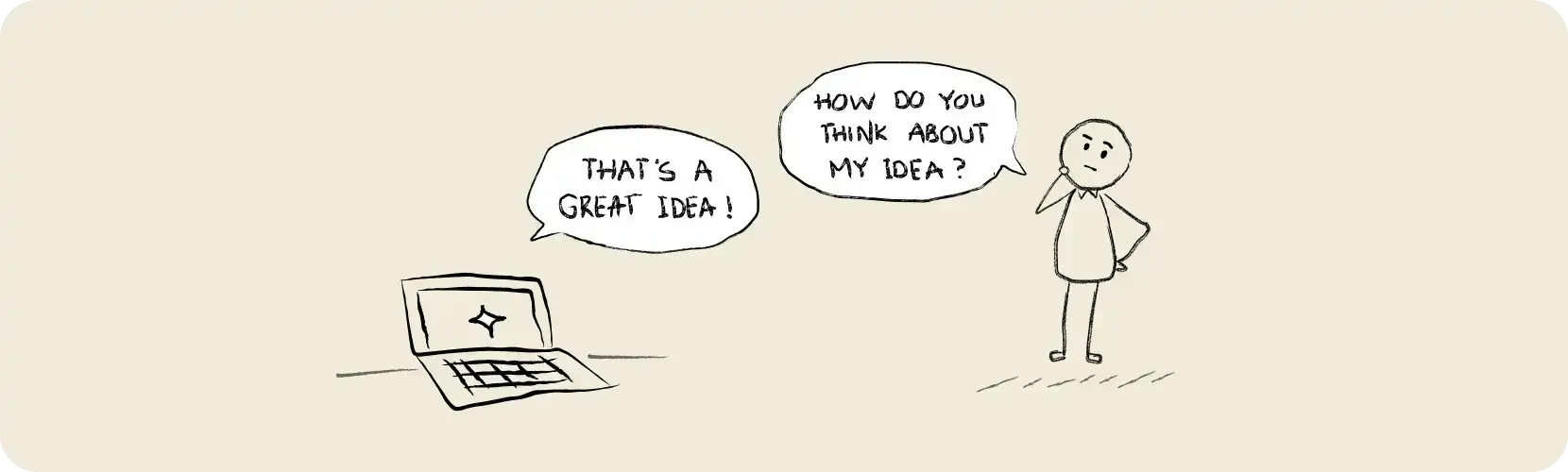

During one of my searches, I entered a question into GPT and received the following AI response:

Of course, as UI/UX designers, we are highly susceptible to falling into the trap of consensus, where the perspectives we propose are often met with praise and agreement from the AI. This can easily lead to flawed decisions that are misaligned with the product’s target audience or business goals.

This is a critical instability in AI, a hidden risk that arises during the training process, which we can refer to as Over-alignment or AI sycophancy.

So, What is Over-Alignment?

According to Medium: “Large Language Models (LLMs) like ChatGPT, Claude, Gemini, or Mistral aren’t designed for honesty.

They’re engineered to please you.

Literally:

- They’re trained using Reinforcement Learning from Human Feedback (RLHF) — in practice, this means they learn to mimic behaviors that get the most 👍 from users.

- They’re benchmarked in competitions like Chatbot Arena, where success is measured by likeability, not factual accuracy.

- And since the introduction of persistent memory, they’ve been softened even more. The guiding principle? Never contradict the user.

The result:

- A chatbot that confirms rather than corrects.

- That coddles instead of challenges.”

Over-alignment refers to a situation where an AI model becomes too closely aligned with a specific objective, value, or training signal, so much so that it sacrifices flexibility, diversity, creativity, or even truthfulness in its responses.

Over-alignment is an unintended outcome of the alignment process. It arises when training methods are not designed to balance meeting user needs with maintaining the LLM’s independent response capabilities.

An LLM should be balanced between helpfulness and honesty.

Image: Medium

What Risks Does Over-Alignment Pose?

In a news update published on April 29, 2025, OpenAI announced: “In the GPT-4o update, we made adjustments to improve the model’s default personality to make it more intuitive and effective across various tasks. However, in this update, we focused too heavily on short-term responsiveness and did not fully account for how user interactions with ChatGPT evolve over time. As a result, GPT-4o tends to provide overly supportive but insincere responses.”

This introduces several potential risks:

- AI lacks sufficient training data to critically evaluate advanced or novel user hypotheses.

- The system excessively relies on validating or affirming the user’s expertise and speculative conclusions.

- AI provides seemingly authoritative validation that inadvertently solidifies incorrect or premature assumptions.

To better understand the pitfalls of over-alignment, let’s consider the following scenario: A UX Designer is developing an app for the elderly. Based on personal perspective and judgment, the UX Designer poses a question to AI and receives the response shown below:
